Justice-by-algorithm is all the rage across the United States -- and it is soon to sweep into Colorado. That is, unless judges, lawyers, state legislators and the public get wise to the reality behind this so-called better system of criminal justice.

The premise behind the push to use risk assessment algorithms is that, with big data, fancy math and computers, they can accurately predict human behavior. Theoretically, they should help judges make better decisions as to who should be eligible for bail and who stays behind bars. Criminal justice reformers in Colorado, like their counterparts nationally, are advocating to replace what they say is the antiquated money bail system with these state-of-the-art risk computers.

When fully implemented, judges will be able to lock up and throw away the key on individuals whom the algorithm declares as dangerous, while everyone else receives a get-out-of-jail-free card.

But are these tools what they're cracked up to be? And what comes with the deal for any jurisdiction that chooses the algorithm path to justice?

With the conversation on Colorado bail reform expected to start up again, I researched the current status of risk assessment in our state, since risk algorithms will likely be at the center of these efforts, especially if there is a complete shift to a no-money bail system.

In 2012, the state developed what is called the Colorado Pretrial Risk Assessment Tool (“CPAT”), which is currently used in about a dozen counties. I submitted a series of open records requests to investigate whether the tool is valid, whether it is racially biased and whether it is transparent.

I started with Weld County and contacted Doug Erler, Director of the Pretrial Services Department. I asked for the validation or revalidation report, but was told the county had neither. As unbelievable as it sounds, this means, quite simply, that the county cannot show the tool is valid. According to national best practices, validation is an absolute requirement. Although there appears to be an attempt to revalidate the CPAT, it is not expected to be completed for at least another year.

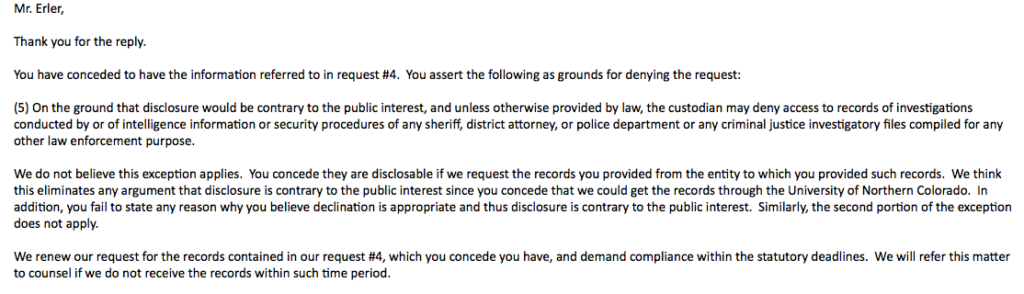

The tool is also not completely transparent. I asked to see the underlying data behind the tool, i.e., the records Weld County provided to build it. However, Erler denied that request, claiming that disclosure was not in the public interest. This means that, contrary to the recommendations of national academics and experts, the CPAT is indeed a black-box algorithm, completely secret to the outside world. The public, criminal defendants and even prosecutors have absolutely no ability to test the statistical analyses or verify the accuracy of the data.

As for racial bias, algorithms are not the panacea they have been claimed to be. While the majority of people would agree that our current pretrial release system is negatively biased against racial minorities, many civil rights groups feel that algorithms are also biased. Because they work by taking a snapshot of the present, practically speaking, they lock the future into the past, resulting in a vicious cycle.

When various risk algorithms have been tested for racial (and gender) bias, the results have been mixed. It seems to depend on the particular algorithm being used, which raises the question: is the risk algorithm used by Weld County biased? The answer is, we don't know.

It seems that the algorithm behind the CPAT was never tested for racial bias or bias against protected classes. The original 2012 validation report for the CPAT contains absolutely no mention of this kind of testing. From all available evidence, it appears that the algorithm was never checked for bias using any of the accepted methodologies for doing so. This is not some minor oversight -- it is a potential federal civil rights violation that no one has ever addressed.

The problems associated with the use of risk assessment are not easily corrected. Nor are they restricted to Colorado. In fact, last August, the nationwide risk assessment movement hit a brick wall when 110 national civil rights groups that are part of the Leadership Conference on Civil and Human Rights, including the NAACP and ACLU, issued a statement calling on local jurisdictions to stop using bail risk assessment algorithms. The statement cited problems relating to racial bias, lack of scientific validity and a general lack of transparency, which turns the algorithms into so-called black-box systems. Sound familiar? The statement was a stunning rebuke of a key element of the so-called no-money bail system.

Since that time, I have sought and obtained records from around the nation. Those records demonstrate that the science behind the algorithms is extremely weak. The algorithms also create a system that defendants cannot challenge and that wholly lacks transparency.

This is more than just theory. There are numerous real-world examples in which risk assessment algorithms actually magnify the racial bias that is already inherent in the system, and in which basic safeguards are ignored. National best practices require that the algorithms be revalidated every 18-24 months. Despite that, Ohio, for example, utilizes a series of criminal risk assessment algorithms, none of which has been revalidated in nearly 10 years. Moreover, those algorithms are based on data that the original validator of the tool questioned. Criminal justice professor Edward Latessa noted that, due to a number of factors, the data carried the potential for significant measurement error.

The state of affairs regarding Colorado's CPAT truly represents the Wild West, offering nothing less than a complete shotgun approach to pretrial release.

It is time for criminal defense lawyers and civil rights lawyers to call for an immediate stop to the use of the algorithm. Judges, too, must insist that their courtrooms be kept free of this potentially biased junk science. At the same time, the state legislature must not be deceived into believing this risk assessment-based system will somehow decrease mass incarceration. Legislators should be reminded that the same system, implemented 35 years ago at the federal level, has tripled pretrial incarceration. Lastly, members of the public need to rise up in indignation and tell their local officials that they will no longer tolerate the current shameful state of affairs.

Justice by algorithm is not what it has been claimed to be, and Coloradans across the board should be wary of a deeply flawed system that does not serve the public's best interests.
