Algorithm Using Racist Drug War Data to Determine Missourians’ Freedom

(Excerpt from Filter, July 29, 2019)

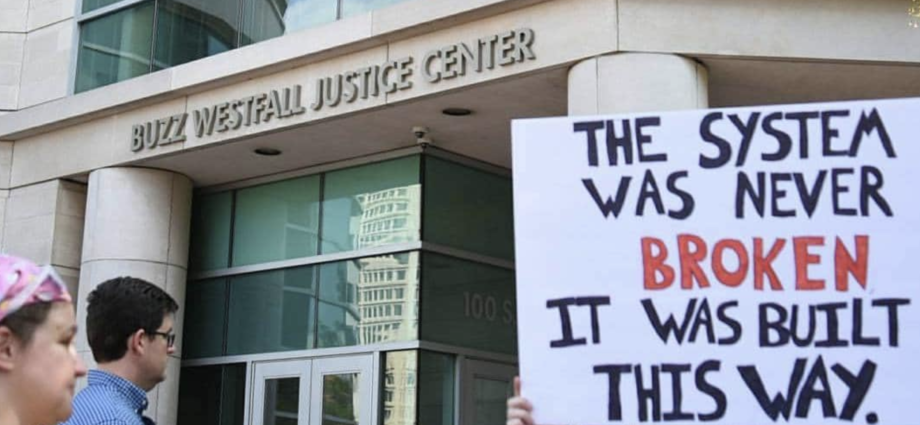

An algorithm built on data from decades of drug war policing, which has disproportionately targeted Black Missourians, will soon help determine whether a defendant is jailed before trial. The plan, recently adopted by the Supreme Court of Missouri and set to take effect in 2020, is under scrutiny from researchers and activists for having racism, quite literally, programmed into it.

The state Supreme Court’s Rule 33.01 was published on June 30, after a court in the Eastern District of Missouri ruled earlier that month to end money bail for indigent defendants. The rule directs courts to consult “evidentiary-based risk assessment tool[s]” when deciding whether to “detain” or “release” defendants.

Risk assessment tools use a mathematical formula to estimate the probability that a defendant will be arrested again or miss a court appearance. The result is a risk score that a judge can weigh when setting pretrial conditions.
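To make the mechanics concrete, here is a minimal sketch of one common way such a formula can work: a logistic model that turns a defendant’s recorded history into a probability, which is then binned into a score. Everything here is hypothetical. The feature names, the coefficients, and the six-level scale are invented for illustration and are not taken from any tool used under Rule 33.01.

```python
import math

# Hypothetical coefficients for illustration only; NOT from any real
# pretrial tool, and not from anything adopted under Missouri Rule 33.01.
INTERCEPT = -2.0
WEIGHTS = {
    "prior_arrests": 0.30,             # count of past arrests on record
    "prior_failures_to_appear": 0.50,  # count of missed court dates
    "pending_charge": 0.40,            # 1 if another case is open, else 0
}


def rearrest_probability(features: dict) -> float:
    """Logistic model: p = 1 / (1 + e^-(b0 + sum of b_i * x_i)).

    Any feature missing from `features` is treated as 0.
    """
    z = INTERCEPT + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def risk_score(p: float, levels: int = 6) -> int:
    """Bin a probability in [0, 1] into an integer score from 1 to `levels`."""
    return min(levels, int(p * levels) + 1)


# Two hypothetical defendants who differ only in how many arrests are on record.
a = {"prior_arrests": 1, "prior_failures_to_appear": 0, "pending_charge": 0}
b = {"prior_arrests": 5, "prior_failures_to_appear": 0, "pending_charge": 0}
score_a = risk_score(rearrest_probability(a))
score_b = risk_score(rearrest_probability(b))
```

In this toy model, defendant `b` receives a higher score than `a` solely because more arrests appear in the record. If those extra arrests reflect heavier policing of one neighborhood rather than different behavior, the formula passes that disparity straight through to the judge.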

Supporters of actuarial tools in pretrial decisions argue that risk scoring advances a reform goal: reducing the use of money bail and pretrial detention.

“We have to evaluate each person in and of themselves and use whatever set of facts we have about who they are. If we can do this, we will have a better system, fairer outcomes, and less incarceration,” said Wilford Pinkney, Jr., an academic and former New York police detective now working with the St. Louis Department of Public Safety to develop “alternatives to cash bail.” “The ultimate goal is to decrease the jail population.”

But three researchers from the Harvard Law School Criminal Justice Program and MIT Media Lab sent a July 17 letter to Missouri Supreme Court judges denouncing the use of pretrial risk assessments in the name of bail and pretrial detention reform. They wrote that these tools “suffer from serious methodological flaws that undermine their accuracy and effectiveness.”

In an attached position paper, MIT research scientists Karthik Dinakar and Chelsea Barabas and Harvard Law staff attorney Colin Doyle, joined by 24 other researchers, explained that the tools draw from “deeply flawed data, such as historical records of arrests, charges, convictions, and sentences.”

The criminal histories feeding these algorithmic calculations are shaped by arrest patterns that, for example, disproportionately criminalize Black Americans for marijuana use. Across the country, Black people aged 27 are 235 percent more likely than their white counterparts to be arrested for marijuana law violations, even though both groups use cannabis at equivalent rates, the researchers noted.

The tools’ racial bias, and their failure to “increase the likelihood of better pretrial outcomes, much less guarantee” them, as the researchers write, could have devastating effects in St. Louis, a city with slightly more Black residents (47 percent) than white ones (45 percent). Of the more than 16,700 people detained before trial in St. Louis in 2016, Black defendants outnumbered white defendants three to one. Black Missourians also made up three-quarters of the clients seeking bail assistance from The Bail Project’s St. Louis location, according to the district court ruling.

The judges involved in the Missouri decision did not respond to Filter’s request for comment by publication time.
